Introduction

Artificial Intelligence (AI) is everywhere these days. Most of us are aware of its use in facial recognition software and self-driving vehicles, but few of us realize it is also deployed in areas like hiring, disability detection, and school admissions. And as much as AI companies want to tout computers, algorithms, and deep learning as the solution to all our problems, the technology has a long way to go before it can offer sustainable solutions that don't also introduce a host of new problems.

One of the places this is most evident is image accessibility. Because our world is increasingly visual, vast amounts of information are transmitted through images and videos, often without any accompanying text or audio. Since folks who are blind, visually impaired, or have information processing differences rely on touch and hearing to interact with the world, their experience of this visual information is quite different from that of people who rely on sight. Imagine attending a presentation or demo where much of the information is communicated via slides…that you can't see!

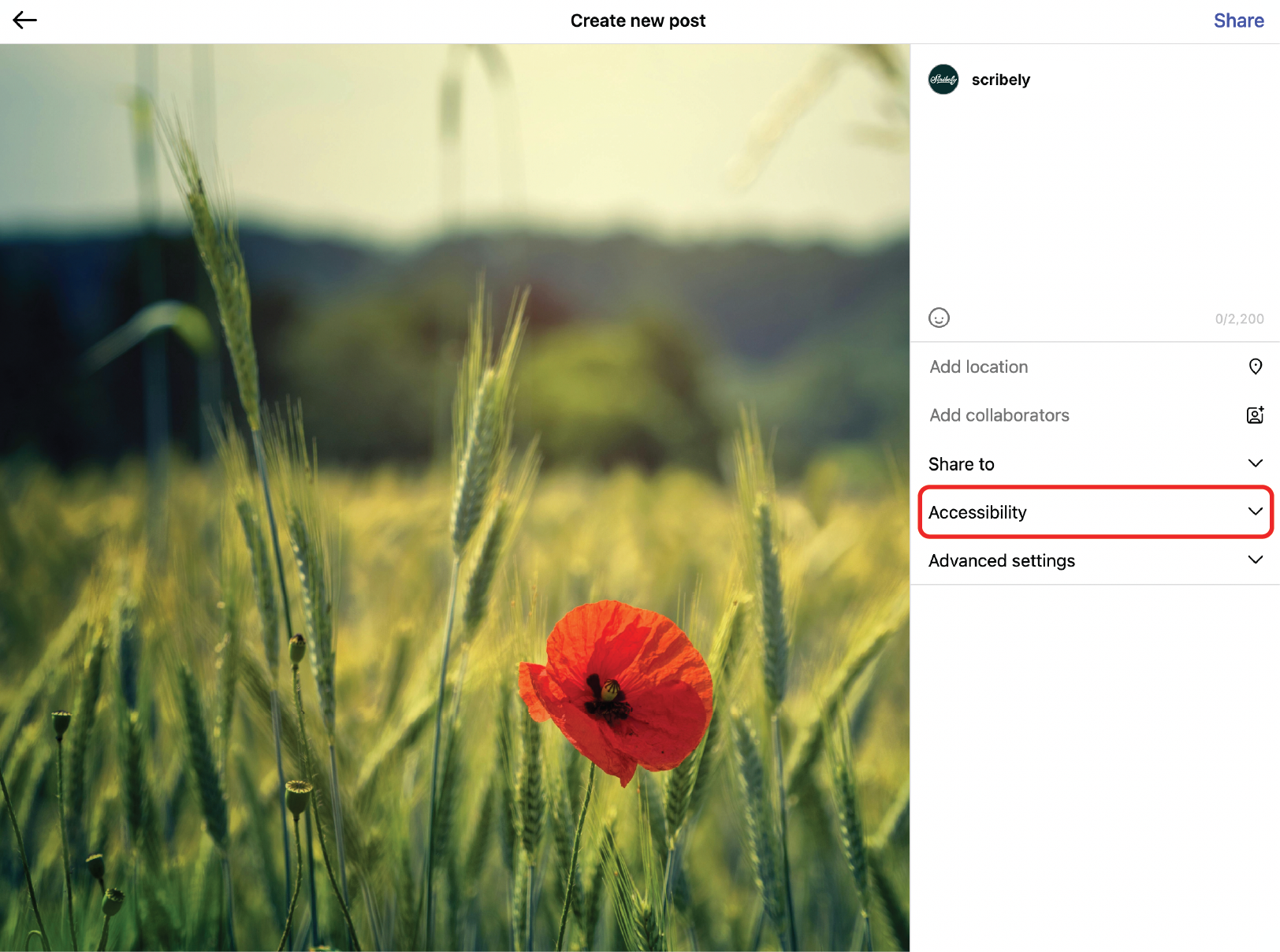

This isn't a new problem, and more companies are stepping up to help overcome this visual bias by describing visual information, by providing a designated place for such descriptions in their software, or both. For example, when you insert an image into a Microsoft 365 app, there is an option called "Alt Text" (short for Alternative Text), the current industry standard for describing images. It's simply a brief (250 characters or fewer) written description of what's in an image that screen readers can read aloud. Other major software providers like Google and Apple have built similar options into their platforms, and Digital Asset Management (DAM) providers like Tandem Vault are beginning to do the same.

However, writing such descriptions takes time and money, especially for huge libraries of visuals, so AI "solutions" are becoming more and more common. Many of the software companies mentioned above offer AI tools that automatically generate editable Alt Text. Simply drop the image in, and a description appears. Accessibility without any effort and at a fraction of the cost: amazing, right?!

Well, not so fast.

As Engadget pointed out in its 2021 tech accessibility report card, "Alt text and captioning continue to be tricky accessibility features for the industry. They're labor-intensive processes that companies tend to delegate to AI, which can result in garbled, inaccurate results." This is because, at its current stage of development, AI struggles with complicated, vague, or nuanced subject matter (for a variety of reasons, many of which are discussed by the AI Now Institute at NYU). That struggle can lead not only to unhelpful outcomes, like misidentifying a dog as a horse, but also to horribly offensive ones, like when the Google Photos app labeled two Black people as "gorillas" in 2015.

So the idea is great in theory (save time and money!) but falls far short in practice: the results may help no one and may even offend. In this case, you really do get what you pay for.

Let's look at a few examples. We'll write our own Alt Text descriptions for a few images, then drop the same images into an AI to see what its algorithm suggests…and compare!

We’ll start simple.

Human-Generated Alt Text: Low-angle view of grassy, treeless foothills leading to a jagged black peak partially shrouded by dark clouds.

AI-Generated Alt Text: A picture containing sky, outdoor, nature, mountain.

As you can see, the AI correctly identified what the picture contained…and not much else. That description could apply to almost any picture of a mountain, no matter how different the images are. It doesn't paint a clear picture, so a person using a screen reader would miss a lot of important detail. And if the image appeared in a database of mountain photos, that person would hear the same description over and over. That doesn't serve a business or brand either, because whatever information the image was meant to convey is lost.

But this is a relatively simple example with results that are annoying at worst. Let’s look at what happens with something a bit more complicated.

Human-Generated Alt Text: Large pink flowers with green leaves and thorny stems digitally manipulated into the side profile of a statue against a black background.

AI-Generated Alt Text: A close-up of a flower.

In this example, the AI didn't even mention the color of the flowers; more importantly, it missed the entire point of the image. Would you be happy if a search engine or image repository returned this image for the query "close-up of pink flower"? Or, if it were on an artist's website, would this description help you grasp the true meaning of the art?

Again, the results here might be frustrating, maybe even costly for an artist, but not disastrous. When we get more complicated, though, things quickly go from aggravating to appalling.

Human-Generated Alt Text: A fashionable person with styled white hair wearing bright makeup and dark blue clothing walks in front of a brick building.

AI-Generated Alt Text: A person in a garment.

Thankfully, the AI didn't assume the person's gender, which is a positive, but the description is so bland that it could apply to any picture of any person wearing clothes. It doesn't even offer a starting point on which a human writer could build a better description.

This image shows a person who doesn't fit gender and appearance stereotypes, but the problem isn't limited to gender. Anything that doesn't fit what an AI has learned to treat as "normal," especially when it comes to humans, risks being underdescribed or inaccurately described. As Thomas Smith, co-founder and CEO of Gado Images, explains, "If a human struggles to accurately describe an image, then a machine will almost certainly fare worse" (Medium).

As we've seen, bad Alt Text has many negative impacts: people are offended and view the brand negatively; blind and visually impaired people are misled about what visual content contains; search queries return inaccurate results; and more. So let's address the question we're all asking: if AI isn't the solution we need and human-powered solutions require a larger financial investment, what can we do? The answer has two parts.

First, to deal with the backlog of visual information already on the Internet, a hybrid approach may strike the right balance. This could involve using AI to generate initial descriptions that humans then review and improve where necessary. Alternatively, AI could be used for object recognition, allowing writers to describe large collections of similar images more quickly. Second, we must prioritize human-written descriptions at the source for all new content going forward. This will require Alt Text training, outsourcing the job to a company that specializes in internet accessibility, or both.
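One way to picture the review step of a hybrid approach is a simple triage filter that decides which AI drafts a human writer should look at first. The Python sketch below is a hypothetical illustration, not a feature of any particular product: the 250-character limit comes from the Alt Text description earlier in this post, while the eight-word minimum and the list of generic openings (borrowed from the AI outputs in our examples) are our own assumptions.

```python
# Hypothetical triage filter for a hybrid Alt Text workflow.
# Assumptions (not from any specific product): a 250-character limit,
# an 8-word minimum, and a hand-picked list of generic AI openings.
from dataclasses import dataclass

MAX_LENGTH = 250
MIN_WORDS = 8  # assumed threshold; tune for your own image library
GENERIC_PREFIXES = ("a picture containing", "a close-up of", "a person in")


@dataclass
class AltTextDraft:
    image_id: str
    text: str


def review_reasons(draft: AltTextDraft) -> list[str]:
    """Return reasons a draft should go to a human writer (empty list = no flags)."""
    text = draft.text.strip()
    if not text:
        return ["missing"]
    reasons = []
    if len(text) > MAX_LENGTH:
        reasons.append("too long")
    if len(text.split()) < MIN_WORDS:
        reasons.append("too short")
    # str.startswith accepts a tuple and matches any of its prefixes
    if text.lower().startswith(GENERIC_PREFIXES):
        reasons.append("generic phrasing")
    return reasons


drafts = [
    AltTextDraft("mountain-ai", "A picture containing sky, outdoor, nature, mountain."),
    AltTextDraft("flowers-ai", "A close-up of a flower."),
    AltTextDraft(
        "mountain-human",
        "Low-angle view of grassy, treeless foothills leading to a jagged "
        "black peak partially shrouded by dark clouds.",
    ),
]

for d in drafts:
    print(d.image_id, review_reasons(d))
```

Run against our examples, both AI drafts are flagged as too short and generic, while the human-written mountain description passes. A flag only routes an image to a person; it cannot tell whether a description is accurate, which still takes a human who can see the image.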

Ultimately, in our modern digital age, competitive advantage no longer comes from creating amazing content, but from making that amazing content accessible to all. Thankfully, scaling human-written Alt Text is not impossible, and devoting care and attention to accessibility is a huge opportunity for brands and businesses. How will you harness it?

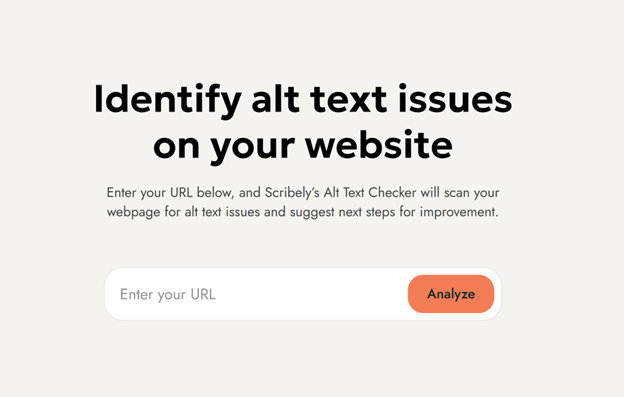

Interested in learning more about how the experts at Scribely can help you meet your accessibility goals? Contact us today for more information!
